Introduction

Supercomputers have long been at the forefront of scientific research, weather forecasting, data analytics, and many other applications that demand immense computational power. They are the workhorses behind complex simulations, deep learning, and the most data-intensive tasks. At the core of most modern supercomputers are two kinds of processor: the Central Processing Unit (CPU) and the Graphics Processing Unit (GPU). In this blog post, we will look at CPU and GPU architecture, the role each one plays, and how the two work together on large-scale computation.

The Central Processing Unit (CPU)

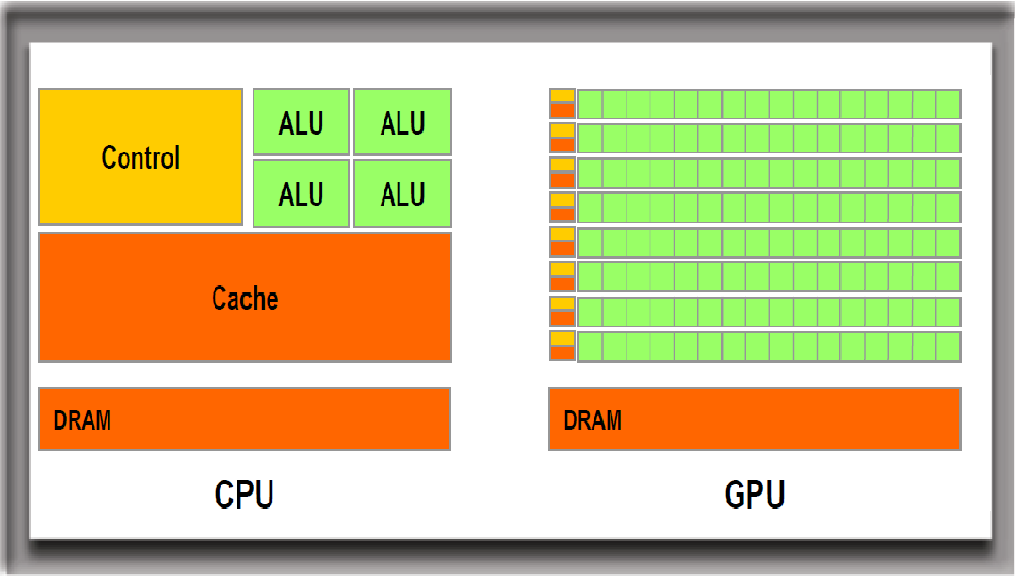

The CPU is often referred to as the “brain” of a computer. It’s responsible for executing instructions and performing general-purpose computing tasks. Modern CPUs are incredibly powerful, with multiple cores and sophisticated microarchitectures. Each core can handle a wide range of tasks, and it is optimized for sequential processing: running a single stream of instructions with as little delay as possible.

The architecture of a CPU can be broken down into several key components:

Control Unit: This component is responsible for fetching instructions from memory, decoding them, and controlling the execution of those instructions. It coordinates the operation of other CPU components.

Arithmetic Logic Unit (ALU): The ALU performs arithmetic and logic operations, including addition, subtraction, AND, OR, and more. It’s the part of the CPU responsible for actual computation.

Registers: Registers are small, high-speed storage locations within the CPU. They store data that is currently being operated on, and they provide fast access for the CPU.

Cache: CPUs have multiple levels of cache memory, which serve as a bridge between the CPU and main memory (RAM). These caches store frequently used data and instructions to reduce memory access times.

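Pipeline: Modern CPUs employ pipelining, which splits instruction execution into stages (such as fetch, decode, and execute) so that several instructions can be in flight at once, each in a different stage. This enhances throughput by overlapping the execution of consecutive instructions.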
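
To put a rough number on the benefit of pipelining, here is a minimal sketch of an idealized pipeline model. It assumes every stage takes one cycle and that there are no stalls or hazards, which real CPUs do not guarantee; the function names are made up for illustration.

```python
def unpipelined_cycles(num_instructions: int, num_stages: int) -> int:
    """Each instruction passes through every stage before the next one starts."""
    return num_instructions * num_stages


def pipelined_cycles(num_instructions: int, num_stages: int) -> int:
    """Ideal pipeline: fill it once, then finish one instruction per cycle."""
    if num_instructions == 0:
        return 0
    return num_stages + (num_instructions - 1)


if __name__ == "__main__":
    n, k = 100, 5  # hypothetical workload: 100 instructions, 5 stages
    slow = unpipelined_cycles(n, k)
    fast = pipelined_cycles(n, k)
    print(f"unpipelined: {slow} cycles, pipelined: {fast} cycles")
```

For 100 instructions and 5 stages, the model gives 500 cycles without pipelining and 104 with it. As the instruction count grows, the ideal speedup approaches the number of stages.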

The Graphics Processing Unit (GPU)

GPUs, on the other hand, are designed for parallel processing and excel at handling massive amounts of data simultaneously. While they are commonly associated with rendering graphics for video games and movies, GPUs have found new life in fields like scientific computing, artificial intelligence, and machine learning.

A GPU’s architecture differs significantly from that of a CPU. Here are some key features:

Streaming Multiprocessors (SMs): In NVIDIA’s terminology, a GPU consists of multiple SMs, each containing a group of CUDA cores. These SMs are capable of performing parallel processing on a large scale, making GPUs particularly well-suited for tasks like deep learning.

Global Memory: GPUs have their own memory hierarchy, which includes global memory. This is the GPU’s main device memory: it is much larger than on-chip cache, but it has higher access latency. It’s used to store data that can be accessed by all threads running on the GPU.

Texture and Render Units: These units handle the rendering and display aspects of GPUs. They are crucial for gaming and graphics-related tasks.

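Memory Hierarchy: In addition to global memory, GPUs have shared memory and local memory. Shared memory is a small, fast on-chip memory visible to a group of cooperating threads, while local memory is private to a single thread. Which one a program uses depends on how its data is shared and reused.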
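
The programming model behind these features can be sketched without a GPU at all. The example below is a plain-Python emulation, not real GPU code: it assumes a CUDA-style grid of blocks and threads, uses ordinary lists to stand in for global memory, and visits the threads one after another, where a real GPU would run them concurrently across its SMs. The names `vector_add_kernel` and `launch` are hypothetical.

```python
def vector_add_kernel(block_idx, thread_idx, block_dim, a, b, out):
    """Work done by ONE thread: compute a single output element."""
    i = block_idx * block_dim + thread_idx  # global index of this thread
    if i < len(out):  # guard: the last block may have surplus threads
        out[i] = a[i] + b[i]


def launch(kernel, grid_dim, block_dim, *args):
    """Emulate a kernel launch by visiting every (block, thread) pair in turn."""
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, thread_idx, block_dim, *args)


def vector_add(a, b, block_dim=4):
    """Add two equal-length lists using one emulated thread per element."""
    out = [0] * len(a)
    grid_dim = (len(a) + block_dim - 1) // block_dim  # ceiling division
    launch(vector_add_kernel, grid_dim, block_dim, a, b, out)
    return out


if __name__ == "__main__":
    print(vector_add([1, 2, 3, 4, 5], [10, 20, 30, 40, 50]))
```

The point of the sketch is that each thread touches exactly one element and needs nothing from its neighbors, which is what lets the hardware spread the work over many cores.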

The Synergy Between CPU and GPU

While CPUs and GPUs have distinct architectural differences, they are often used together to leverage the strengths of both for maximum computational power. This collaboration is most evident in supercomputers and high-performance computing (HPC) clusters.

CPUs are used for tasks that require sequential processing and complex decision-making. They can efficiently manage the overall flow of a program and are responsible for tasks like data pre-processing, system management, and directing the GPU’s workload. In contrast, GPUs handle tasks that can be parallelized, such as matrix multiplication, deep learning training, and complex simulations.
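
Matrix multiplication is a good illustration of why some tasks parallelize so well: every cell of the result can be computed without looking at any other cell. The sketch below, in plain Python with hypothetical function names, makes that visible by filling in the cells in a shuffled order and still arriving at the same answer.

```python
import random


def output_cell(a, b, row, col):
    """Dot product of one row of `a` with one column of `b`."""
    return sum(a[row][k] * b[k][col] for k in range(len(b)))


def matmul_any_order(a, b, seed=0):
    """Fill the result one cell at a time, in a shuffled order."""
    rows, cols = len(a), len(b[0])
    cells = [(r, c) for r in range(rows) for c in range(cols)]
    random.Random(seed).shuffle(cells)  # the order does not affect the result
    result = [[0] * cols for _ in range(rows)]
    for r, c in cells:
        result[r][c] = output_cell(a, b, r, c)
    return result


if __name__ == "__main__":
    a = [[1, 2], [3, 4]]
    b = [[5, 6], [7, 8]]
    print(matmul_any_order(a, b))
```

Because the order of the cells is irrelevant, a GPU is free to assign each one to a different thread and compute them all at the same time.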

One of the key components that enables CPU-GPU synergy is the PCI Express (PCIe) bus. This high-speed interface connects the CPU and GPU and carries the data they exchange. Because moving data across the bus is much slower than accessing memory on the device itself, well-designed programs keep these transfers to a minimum.
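
Whether handing work to the GPU pays off depends on how long the data transfers take relative to the computation itself. Here is a rough back-of-the-envelope model of that trade-off. All of the numbers in it are hypothetical placeholders rather than measurements of any real bus or device, and the function names are invented for this sketch.

```python
def transfer_seconds(num_bytes, bandwidth_bytes_per_s, latency_s=0.0):
    """Time to move `num_bytes` over a link: fixed latency plus size / bandwidth."""
    return latency_s + num_bytes / bandwidth_bytes_per_s


def offload_seconds(gpu_seconds, bytes_to_gpu, bytes_from_gpu,
                    bandwidth_bytes_per_s, latency_s=0.0):
    """Total time for: copy inputs over, compute on the GPU, copy results back."""
    copy_in = transfer_seconds(bytes_to_gpu, bandwidth_bytes_per_s, latency_s)
    copy_out = transfer_seconds(bytes_from_gpu, bandwidth_bytes_per_s, latency_s)
    return copy_in + gpu_seconds + copy_out


def offload_is_worthwhile(cpu_seconds, gpu_seconds, bytes_to_gpu, bytes_from_gpu,
                          bandwidth_bytes_per_s, latency_s=0.0):
    """True if the GPU path, transfers included, beats running on the CPU."""
    total = offload_seconds(gpu_seconds, bytes_to_gpu, bytes_from_gpu,
                            bandwidth_bytes_per_s, latency_s)
    return total < cpu_seconds


if __name__ == "__main__":
    # Hypothetical job: 1 GB in, 1 GB out, over an assumed 10 GB/s link.
    print(offload_is_worthwhile(cpu_seconds=1.0, gpu_seconds=0.05,
                                bytes_to_gpu=1e9, bytes_from_gpu=1e9,
                                bandwidth_bytes_per_s=10e9))
```

In this made-up case the GPU computes in 0.05 s but spends about 0.2 s on transfers, so offloading only wins when the CPU would need more than roughly a quarter of a second.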

Supercomputing in Action

Now that we understand the architecture of CPUs and GPUs, let’s look at how they come together in supercomputing to tackle some of the most challenging problems in science and industry.

Climate Modeling: Supercomputers are indispensable for simulating climate patterns and predicting weather events. CPUs typically coordinate the model and handle control-heavy code and I/O, while GPUs can accelerate the data-parallel numerical calculations as well as the visualization of the results.

Drug Discovery: In the field of pharmaceuticals, supercomputers equipped with GPUs can quickly analyze massive datasets to identify potential drug candidates. This acceleration is crucial for speeding up the drug development process.

Astronomy and Astrophysics: Understanding the cosmos requires the processing of vast datasets. CPUs manage data analysis and control, while GPUs assist in the rapid processing of images and simulations.

Artificial Intelligence and Machine Learning: The surge in AI and machine learning applications has driven the adoption of GPUs in supercomputing. They excel at training deep neural networks, a task that benefits immensely from parallel processing.

Challenges in Supercomputing

While the collaboration between CPUs and GPUs in supercomputing is highly effective, it also presents challenges. One major issue is the need for efficient programming models and software that can fully utilize the potential of both CPU and GPU architectures.

Developers must create algorithms that can distribute workloads effectively between CPUs and GPUs, ensuring that both are fully utilized. This requires a deep understanding of the unique capabilities of each component.
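
One simple way to think about distributing a workload is to split it in proportion to how fast each processor is, so that both finish at about the same time. The sketch below assumes the work consists of independent, equally sized items and that each device has a known, constant throughput. Real schedulers have to cope with much messier conditions, and the rates used here are invented for illustration.

```python
def split_work(total_items, cpu_rate, gpu_rate):
    """Split items in proportion to throughput (items per second)."""
    if cpu_rate <= 0 or gpu_rate <= 0:
        raise ValueError("rates must be positive")
    gpu_items = round(total_items * gpu_rate / (cpu_rate + gpu_rate))
    cpu_items = total_items - gpu_items
    return cpu_items, gpu_items


def finish_time(cpu_items, gpu_items, cpu_rate, gpu_rate):
    """Both devices run concurrently, so the slower one sets the finish time."""
    return max(cpu_items / cpu_rate, gpu_items / gpu_rate)


if __name__ == "__main__":
    # Hypothetical rates: the GPU processes items 9x faster than the CPU.
    cpu_items, gpu_items = split_work(1000, cpu_rate=100, gpu_rate=900)
    print(cpu_items, gpu_items, finish_time(cpu_items, gpu_items, 100, 900))
```

With these assumed rates, giving the CPU 100 items and the GPU 900 lets both finish in 1 second, slightly faster than the roughly 1.11 seconds the GPU would need to process all 1000 items alone.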

Additionally, power consumption and heat dissipation are concerns in supercomputing. CPUs and GPUs can generate a significant amount of heat when operating at full capacity. Supercomputer facilities must implement advanced cooling solutions to maintain optimal operating conditions.

The Future of Supercomputing

As technology continues to advance, the synergy between CPUs and GPUs is expected to become even more pronounced. The demand for high-performance computing in fields like artificial intelligence, quantum computing, and complex simulations will drive the development of more powerful and efficient CPU-GPU combinations.

New architectures and specialized processors, such as tensor processing units (TPUs), are also emerging, further expanding the toolkit for supercomputing. The future holds the promise of even more impressive computational capabilities, allowing researchers and scientists to tackle previously insurmountable challenges.

Conclusion

In the world of supercomputing, the partnership between Central Processing Units (CPUs) and Graphics Processing Units (GPUs) combines fast sequential processing with massive parallel processing. This collaboration has made it possible to solve complex problems across many fields, from scientific research to AI and beyond.

Understanding the architecture and capabilities of CPUs and GPUs is crucial for harnessing their full potential. The future of supercomputing holds exciting possibilities, as technology continues to advance and unlock new realms of computational power. The synergy between these two components will remain at the heart of this computational revolution.