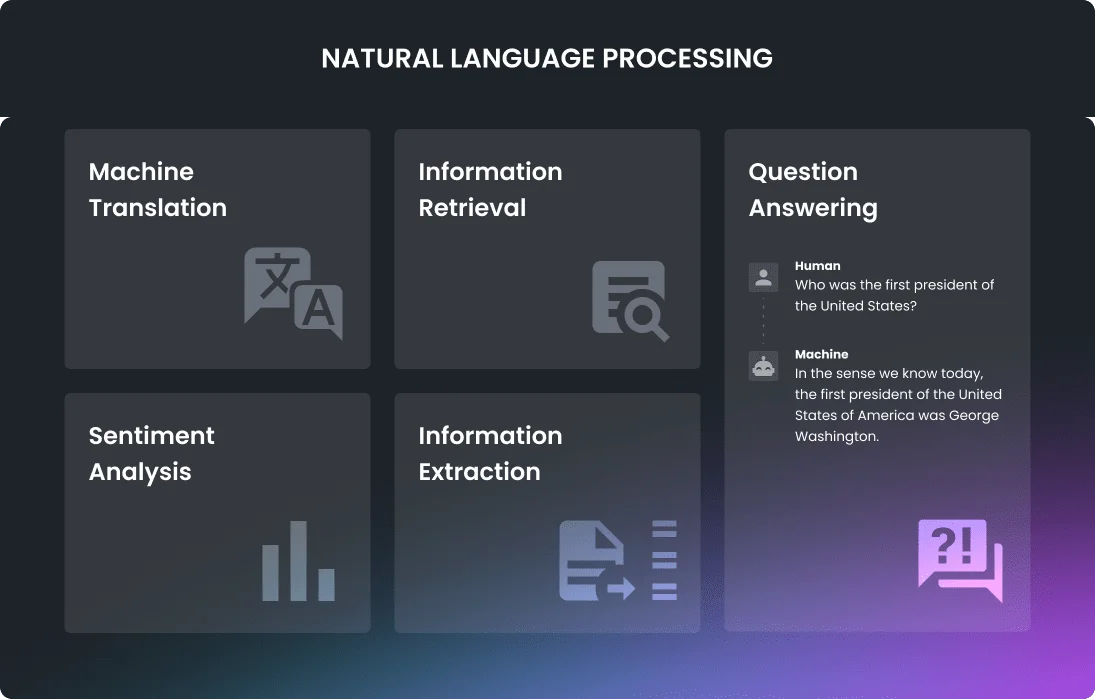

Natural Language Processing (NLP) is a field of artificial intelligence that focuses on enabling machines to understand, interpret, and generate human language. From chatbots and sentiment analysis to language translation and text summarization, NLP has found applications in various domains. However, despite its growing popularity and success stories, NLP projects come with their fair share of challenges. In this blog post, we’ll delve into some of the most common obstacles faced in NLP projects and provide insights on how to overcome them.

- Data Quality and Quantity

One of the fundamental challenges in NLP is acquiring high-quality data. Natural language is complex, nuanced, and often ambiguous. To train NLP models effectively, you need large and diverse datasets. However, gathering such data can be expensive and time-consuming. Moreover, ensuring data quality, accuracy, and consistency is a constant struggle.

How to Overcome It:

Invest in data preprocessing: Clean and normalize your text (strip markup, fix encoding problems, remove duplicates) and annotate it meticulously and consistently to remove noise and label inconsistencies.

Augmentation techniques: Enlarge your dataset by creating label-preserving variations of existing examples, for instance by replacing words with synonyms or by back-translation (translating a sentence into another language and back).

Transfer learning: Start from models such as BERT or GPT that were pre-trained on large, publicly available text corpora, and fine-tune them to bootstrap your NLP tasks with far less labeled data.
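As a rough illustration of the first two points, here is a minimal sketch of text cleaning followed by synonym-replacement augmentation. The cleaning rules, the tiny `SYNONYMS` table, and the helper names (`clean_text`, `synonym_replace`, `augment`) are hypothetical choices for this example; a real pipeline would tune the rules to its data and draw synonyms from a proper resource.

```python
import random
import re
import unicodedata

# Hypothetical toy synonym table; a real project might use a thesaurus such as
# WordNet or a curated, domain-specific list instead.
SYNONYMS = {
    "good": ["great", "fine"],
    "movie": ["film"],
    "bad": ["poor", "awful"],
}


def clean_text(text: str) -> str:
    """Normalize Unicode, drop HTML tags, URLs and punctuation, lowercase, collapse spaces."""
    text = unicodedata.normalize("NFKC", text)
    text = re.sub(r"<[^>]+>", " ", text)       # strip HTML tags
    text = re.sub(r"https?://\S+", " ", text)   # strip URLs
    text = re.sub(r"[^\w\s]", " ", text)        # strip punctuation (coarse: also splits "don't")
    text = re.sub(r"\s+", " ", text.lower())    # lowercase and collapse whitespace
    return text.strip()


def synonym_replace(tokens, n_swaps, rng):
    """Return a copy of tokens with up to n_swaps words swapped for a synonym."""
    out = list(tokens)
    candidates = [i for i, tok in enumerate(out) if tok in SYNONYMS]
    rng.shuffle(candidates)
    for i in candidates[:n_swaps]:
        out[i] = rng.choice(SYNONYMS[out[i]])
    return out


def augment(text, n_variants=3, n_swaps=1, seed=0):
    """Generate up to n_variants distinct augmented copies of a cleaned sentence."""
    rng = random.Random(seed)                   # seeded for reproducibility
    tokens = clean_text(text).split()
    variants = {" ".join(synonym_replace(tokens, n_swaps, rng)) for _ in range(n_variants)}
    variants.discard(" ".join(tokens))          # keep only sentences that actually changed
    return sorted(variants)


raw = "A <b>good</b> movie!  See https://example.com"
print(clean_text(raw))  # a good movie see
print(augment(raw))
```

Swapping a word can occasionally change the meaning or label of a sentence, so augmented examples are worth spot-checking before they go into training.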

- Domain-specific Challenges

NLP projects often need to deal with domain-specific jargon, terminology, and context. Building models that can understand specialized language can be challenging, especially when the available training data is limited.

How to Overcome It:

Domain expertise: Collaborate with subject matter experts who understand the nuances of the specific domain you’re working on.

Custom embeddings: Train word embeddings on in-domain text, or use pre-trained embeddings built from your domain, so that specialized terms get meaningful representations.

Data collection: Collect domain-specific data or fine-tune pre-trained models on relevant texts to adapt them to your specific context.
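To make "train word embeddings on domain text" more concrete, the sketch below builds count-based word vectors using positive pointwise mutual information (PPMI) over a co-occurrence window. This is a deliberately simple stand-in for neural embedding methods such as word2vec or fastText; the five-sentence clinical corpus is made up, and the function names are hypothetical.

```python
import math
from collections import Counter


def ppmi_vectors(sentences, window=2):
    """Sparse word vectors {word: {context_word: PPMI}} from a whitespace-tokenized corpus."""
    pair_counts = Counter()
    for sentence in sentences:
        tokens = sentence.lower().split()
        for i, word in enumerate(tokens):
            # Count every context word within `window` positions of `word`.
            for j in range(max(0, i - window), min(len(tokens), i + window + 1)):
                if j != i:
                    pair_counts[(word, tokens[j])] += 1

    total = sum(pair_counts.values())
    word_totals = Counter()
    context_totals = Counter()
    for (word, ctx), n in pair_counts.items():
        word_totals[word] += n
        context_totals[ctx] += n

    vectors = {}
    for (word, ctx), n in pair_counts.items():
        # PMI = log P(w, c) / (P(w) P(c)); keep only positive values (PPMI).
        pmi = math.log(n * total / (word_totals[word] * context_totals[ctx]))
        if pmi > 0:
            vectors.setdefault(word, {})[ctx] = pmi
    return vectors


def cosine(u, v):
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(x * v.get(k, 0.0) for k, x in u.items())
    norm_u = math.sqrt(sum(x * x for x in u.values()))
    norm_v = math.sqrt(sum(x * x for x in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


# Hypothetical clinical snippets where "mi" abbreviates "myocardial infarction".
corpus = [
    "patient presented with acute myocardial infarction",
    "patient presented with acute mi",
    "history of myocardial infarction noted",
    "history of mi noted",
    "patient presented with seasonal allergies",
]
vecs = ppmi_vectors(corpus)
print(cosine(vecs["mi"], vecs["infarction"]))  # shares contexts "acute", "of", "noted"
print(cosine(vecs["mi"], vecs["allergies"]))   # shares only the context "with"
```

On this toy corpus the abbreviation "mi" ends up closer to "infarction" than to "allergies" because it appears in the same contexts. With a realistic amount of domain text, the same distributional idea is what lets domain-specific embeddings capture jargon that general-purpose embeddings miss.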

- Model Selection and Tuning

Choosing the right NLP model architecture and fine-tuning it for your specific task is crucial for project success. However, there’s no one-size-fits-all model, and the optimal architecture can vary depending on the problem at hand.

How to Overcome It:

Experimentation: Try multiple models and hyperparameter settings to find the one that performs best on your validation data.

Transfer learning: Consider using transfer learning techniques to leverage pre-trained models as a starting point and fine-tune them for your task.

Model ensembles: Combine the predictions of multiple models to improve performance and robustness.
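The experimentation and ensembling advice can be sketched with a toy setup: a hypothetical keyword-based sentiment classifier with two hyperparameters, a grid search that keeps the configuration with the best validation accuracy, and a majority-vote ensemble. The lexicons, data, and function names are illustrative assumptions; in practice each candidate would be a trained model.

```python
from collections import Counter
from itertools import product

# Hypothetical toy lexicons standing in for a real trained model.
POSITIVE = {"good", "great", "love", "excellent"}
NEGATIVE = {"bad", "awful", "hate", "boring"}


def make_lexicon_model(pos_weight, threshold):
    """Toy sentiment classifier with two hyperparameters to tune."""
    def predict(text):
        tokens = text.lower().split()
        score = pos_weight * sum(t in POSITIVE for t in tokens) - sum(t in NEGATIVE for t in tokens)
        return "pos" if score > threshold else "neg"
    return predict


def accuracy(model, data):
    return sum(model(x) == y for x, y in data) / len(data)


def grid_search(val_data, pos_weights, thresholds):
    """Try every hyperparameter combination; return (val_accuracy, pos_weight, threshold) of the best."""
    best = None
    for w, t in product(pos_weights, thresholds):
        acc = accuracy(make_lexicon_model(w, t), val_data)
        if best is None or acc > best[0]:
            best = (acc, w, t)
    return best


def majority_vote(models):
    """Ensemble that returns the label predicted by most models (use an odd count to avoid ties)."""
    def predict(text):
        return Counter(m(text) for m in models).most_common(1)[0][0]
    return predict


val = [
    ("good movie", "pos"),
    ("great cast but boring and awful plot", "neg"),
    ("bad and boring", "neg"),
    ("i love it", "pos"),
    ("excellent but a bit boring", "pos"),
]
print(grid_search(val, pos_weights=[0.5, 1.0, 2.0], thresholds=[-1, 0, 1]))

ensemble = majority_vote([make_lexicon_model(w, 0) for w in (0.5, 1.0, 2.0)])
print(ensemble("good movie"), ensemble("bad and boring"))  # pos neg
```

Whatever you tune against the validation set, keep a separate test set aside for the final estimate, since repeated selection on validation data makes its score optimistic.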

- Ethical and Bias Concerns

NLP models can inadvertently perpetuate biases present in their training data, leading to biased or unfair outcomes. Addressing ethical concerns and mitigating biases in NLP projects is a critical aspect of development.

How to Overcome It:

Bias assessment: Regularly assess your model for bias and fairness, for example by comparing selection rates and error rates across demographic groups with dedicated fairness tools and metrics.

Diverse data: Ensure your training data is diverse and representative of the people, and the varieties of language, that your model will serve.

Fairness-aware algorithms: Explore fairness-aware machine learning techniques to reduce discrimination in your models.
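One common starting point for bias assessment is to compute a model's outcomes separately for each group and compare them. The sketch below does this for a binary classifier, reporting the largest between-group gap in selection rate (demographic parity), true positive rate (equal opportunity), and false positive rate. The data is a hypothetical toxicity classifier's output on comments from two dialect groups, and the helper names are illustrative; maintained libraries such as Fairlearn provide production-ready versions of these metrics.

```python
import math
from collections import defaultdict


def group_rates(y_true, y_pred, groups):
    """Per-group selection rate, true positive rate and false positive rate (labels are 0/1)."""
    counts = defaultdict(lambda: {"n": 0, "pred_pos": 0, "pos": 0, "neg": 0, "tp": 0, "fp": 0})
    for yt, yp, g in zip(y_true, y_pred, groups):
        c = counts[g]
        c["n"] += 1
        c["pred_pos"] += yp
        c["pos"] += yt
        c["neg"] += 1 - yt
        c["tp"] += int(yt == 1 and yp == 1)
        c["fp"] += int(yt == 0 and yp == 1)
    nan = float("nan")
    return {
        g: {
            "selection_rate": c["pred_pos"] / c["n"],
            "tpr": c["tp"] / c["pos"] if c["pos"] else nan,
            "fpr": c["fp"] / c["neg"] if c["neg"] else nan,
        }
        for g, c in counts.items()
    }


def max_gap(rates, key):
    """Largest difference in a metric between any two groups (NaNs ignored)."""
    values = [r[key] for r in rates.values() if not math.isnan(r[key])]
    return max(values) - min(values)


# Hypothetical toxicity-classifier outputs (1 = toxic) for comments in two dialects, A and B.
y_true = [1, 0, 0, 1, 1, 0, 0, 1]
y_pred = [1, 0, 0, 1, 1, 1, 1, 1]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = group_rates(y_true, y_pred, groups)
print(max_gap(rates, "selection_rate"))  # 0.5  (demographic parity difference)
print(max_gap(rates, "tpr"))             # 0.0  (equal opportunity difference)
print(max_gap(rates, "fpr"))             # 1.0  (B's non-toxic comments are always flagged)
```

The metrics disagree here: true positive rates are equal, yet every harmless comment from group B is flagged. Which gap matters most depends on the application.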

- Resource Intensiveness

NLP projects often require substantial computational resources, including powerful GPUs and significant memory. This can be a barrier, especially for small teams or organizations with budget constraints.

How to Overcome It:

Cloud resources: Utilize cloud-based services that offer scalable computing resources, such as AWS, Google Cloud, or Azure.

Model optimization: Apply model compression techniques such as quantization, pruning, or knowledge distillation to reduce the size and resource requirements of your NLP models.

Distributed computing: Parallelize and distribute tasks across multiple machines or GPUs to speed up training.
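Quantization is one of the simplest compression techniques to illustrate. The sketch below applies symmetric 8-bit post-training quantization to a hypothetical list of weights: each float is mapped to an integer in [-127, 127] plus one shared scale factor. The function names are illustrative; deep learning frameworks such as PyTorch ship their own quantization tooling, which is what you would use on a real model.

```python
from array import array


def quantize_int8(weights):
    """Symmetric per-tensor quantization: each weight w is stored as q with w ≈ scale * q."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map the stored integers back to approximate float weights."""
    return [scale * v for v in q]


weights = [0.12, -0.5, 0.33, 0.0, 0.49]      # hypothetical layer weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

fp32_bytes = len(array("f", weights).tobytes())   # 4 bytes per weight
int8_bytes = len(array("b", q).tobytes())         # 1 byte per weight (+ one scale per tensor)
print(fp32_bytes, int8_bytes)                     # 20 5
print(max(abs(a - b) for a, b in zip(weights, restored)) <= scale / 2)  # True
```

Storage drops roughly fourfold compared with 32-bit floats, and the rounding error per weight is at most half the scale; whether that error hurts task accuracy has to be checked on your own validation data.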

- Evaluation Metrics

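Choosing appropriate evaluation metrics for NLP tasks can be tricky. A single number like accuracy may not capture the nuances of language understanding: on an imbalanced task, a model that always predicts the most common label can score well while being useless, leading to misleading model assessments.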

How to Overcome It:

Task-specific metrics: Define and use metrics that are specific to your NLP task, such as BLEU score for machine translation or F1 score for named entity recognition.

Human evaluation: Conduct human evaluations to assess the quality of your model’s outputs, especially for tasks that involve subjective judgments.
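To see why task-specific metrics matter, the sketch below compares token-level accuracy with exact-match entity-level F1 for named entity recognition on a hypothetical BIO-tagged sentence. The helper names are assumptions for this example; for real evaluations, maintained implementations such as seqeval (NER) or sacreBLEU (machine translation) are safer choices.

```python
def extract_entities(tags):
    """Convert a BIO tag sequence into a set of (type, start, end) spans, end exclusive."""
    entities, start, etype = set(), None, None
    for i, tag in enumerate(list(tags) + ["O"]):   # trailing "O" flushes the last entity
        starts_new = tag.startswith("B-") or (tag.startswith("I-") and tag[2:] != etype)
        if tag == "O" or starts_new:
            if etype is not None:
                entities.add((etype, start, i))  # close the entity in progress
            start, etype = (i, tag[2:]) if starts_new else (None, None)
    return entities


def entity_f1(gold_tags, pred_tags):
    """Exact-match entity-level F1: a prediction counts only if type and span both match."""
    gold, pred = extract_entities(gold_tags), extract_entities(pred_tags)
    tp = len(gold & pred)
    precision = tp / len(pred) if pred else 0.0
    recall = tp / len(gold) if gold else 0.0
    return 2 * precision * recall / (precision + recall) if precision + recall else 0.0


def token_accuracy(gold_tags, pred_tags):
    return sum(g == p for g, p in zip(gold_tags, pred_tags)) / len(gold_tags)


gold = ["B-PER", "I-PER", "O", "O", "O", "B-LOC", "O", "O", "O", "O"]
lazy = ["O"] * len(gold)                                              # never predicts an entity
partial = ["B-PER", "O", "O", "O", "O", "B-LOC", "O", "O", "O", "O"]  # truncates the person

print(token_accuracy(gold, lazy), entity_f1(gold, lazy))        # 0.7 0.0
print(token_accuracy(gold, partial), entity_f1(gold, partial))  # 0.9 0.5
```

A model that never finds any entity still gets 70% token accuracy, while entity-level F1 correctly scores it at zero.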

Conclusion

Natural Language Processing projects hold immense potential but come with their own set of challenges. From data quality and model selection to ethical considerations, NLP practitioners must navigate these hurdles strategically. By adopting the recommended strategies and staying up-to-date with the latest developments in the field, you can overcome these challenges and build NLP applications that make a real impact.

Remember, NLP is a rapidly evolving field, and the solutions to today’s challenges may become obsolete tomorrow. Continuous learning and adaptation are key to success in NLP projects.