Deep learning has revolutionized the world of artificial intelligence, powering everything from voice assistants to autonomous vehicles. At the heart of this revolution are neural networks. In this blog post, we’ll take a deep dive into the building blocks of neural networks, demystify their inner workings, and explore their incredible applications.

Introduction

Artificial neural networks, loosely inspired by the way biological neurons in the brain signal one another, have become the cornerstone of modern machine learning and deep learning. These networks are versatile, powerful, and capable of solving a wide range of complex tasks, from image recognition to natural language processing. To truly understand the capabilities and limitations of deep learning, it’s essential to grasp the fundamental concepts that underpin neural networks.

In this Neural Networks 101 guide, we’ll cover the basics. We start with the structure of a neural network, move on to key concepts such as neurons, layers, and activation functions, and then walk through how a network is trained and where neural networks are used today. By the end of this journey, you’ll have a solid foundation for exploring more advanced topics in deep learning.

The Neural Network Structure

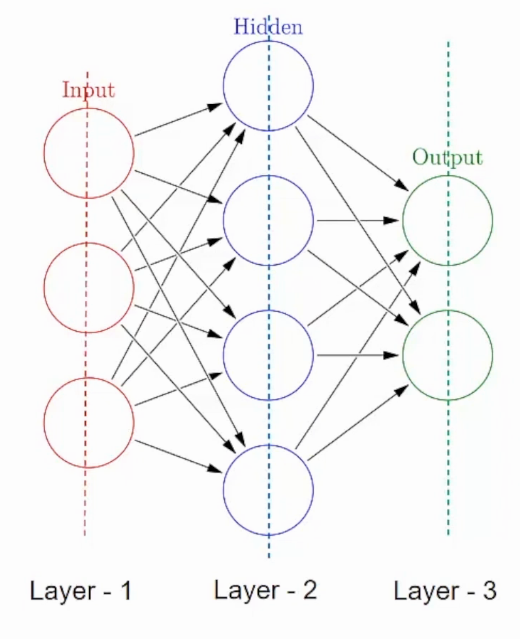

At its core, a neural network is composed of interconnected layers of neurons. These neurons, also known as nodes or units, are loosely modeled on the neurons in our brains, but each one is simply a small mathematical function. The network receives input data, processes it through these layers, and produces an output.

The three fundamental types of layers in a neural network are:

Input Layer: This is where the network receives its initial input data. Each neuron in the input layer represents a feature or dimension of the input data.

Hidden Layers: Between the input and output layers, there can be one or more hidden layers. These layers are responsible for extracting complex patterns and features from the input data.

Output Layer: The final layer produces the network’s output, which could be a classification, regression, or any other type of prediction, depending on the task.
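To make the layer structure concrete, here is a minimal pure-Python sketch of a forward pass through a network with 3 input features, one hidden layer of 4 neurons, and a single output neuron. The layer sizes, the random weights, and the helper names (`dense_layer`, `forward`) are illustrative assumptions, not a prescribed design. Each neuron computes a weighted sum of its inputs plus a bias and passes the result through an activation function: ReLU in the hidden layer and sigmoid at the output.

```python
import math
import random

def relu(z):
    return max(0.0, z)

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def dense_layer(inputs, weights, biases, activation):
    """Fully connected layer: weights[j][i] links input i to neuron j."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        z = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(activation(z))
    return outputs

def random_matrix(rows, cols, rng):
    return [[rng.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]

rng = random.Random(0)              # fixed seed so the example is reproducible
n_inputs, n_hidden, n_outputs = 3, 4, 1

# Hidden layer: 4 neurons, each connected to all 3 input features.
W_hidden = random_matrix(n_hidden, n_inputs, rng)
b_hidden = [0.0] * n_hidden
# Output layer: 1 neuron connected to all 4 hidden neurons.
W_out = random_matrix(n_outputs, n_hidden, rng)
b_out = [0.0] * n_outputs

def forward(x):
    hidden = dense_layer(x, W_hidden, b_hidden, relu)   # hidden layer
    return dense_layer(hidden, W_out, b_out, sigmoid)   # output layer

x = [0.5, -1.2, 3.0]    # input layer: one value per feature
y = forward(x)
print(y)                # a single value between 0 and 1
```

Libraries such as PyTorch or TensorFlow perform the same computation as batched matrix multiplications; the explicit loops here are only for readability.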

Neurons and Activation Functions

Neurons are the computational units of a neural network. Each neuron receives inputs, computes a weighted sum of those inputs, adds a bias term, and then applies an activation function to produce an output. That output is then passed to the neurons in the next layer. The weights and biases are the parameters the network learns during training.
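A single neuron fits in a few lines of Python. This is a minimal illustration that assumes a ReLU activation and hand-picked weights and bias chosen only to keep the arithmetic easy to follow. `neuron` is a hypothetical helper name, not a library function.

```python
def relu(z: float) -> float:
    """ReLU activation: passes positive values through, maps negatives to 0."""
    return max(0.0, z)

def neuron(inputs, weights, bias, activation=relu):
    """One artificial neuron: weighted sum of inputs plus a bias, then an activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias  # pre-activation value
    return activation(z)

inputs = [1.0, 2.0, 2.0]
weights = [0.5, -0.25, 0.75]

print(neuron(inputs, weights, bias=0.25))  # 1.75
print(neuron(inputs, weights, bias=-2.0))  # 0.0 (negative sum clipped by ReLU)
```

With a bias of 0.25 the weighted sum is 0.5*1 - 0.25*2 + 0.75*2 + 0.25 = 1.75, which ReLU passes through unchanged. Lowering the bias to -2.0 makes the sum -0.5, so ReLU outputs 0.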

One crucial aspect of neural networks is the choice of activation function. Common activation functions include:

Sigmoid: Produces values between 0 and 1, often used in the output layer for binary classification problems.

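ReLU (Rectified Linear Unit): Outputs the input for positive values and zero for negative values. It is widely used in hidden layers because it is cheap to compute and, unlike sigmoid and tanh, does not saturate for positive inputs, which helps gradients flow during training.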

Tanh (Hyperbolic Tangent): Similar in shape to the sigmoid but produces values between -1 and 1, so its outputs are centered on zero. It is also used in hidden layers.

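The choice of activation function affects how well and how quickly the network learns. Without a nonlinear activation, any stack of layers would collapse into a single linear transformation, so nonlinearity is what lets a network model complex relationships. Saturating functions such as sigmoid and tanh have gradients close to zero for large positive or negative inputs, which can slow learning in deep networks (the vanishing gradient problem).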
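The three activation functions can be written directly with Python’s `math` module, together with the derivatives that training relies on. This is a sketch for illustration. The sigmoid is split by sign so that `math.exp` never overflows for large negative inputs, and treating the ReLU derivative at exactly 0 as 0 is a common convention, not a mathematical necessity. Printing the derivatives for a few inputs shows the saturation effect: the sigmoid and tanh gradients shrink toward zero as |z| grows, while the ReLU gradient stays at 1 for any positive input.

```python
import math

def sigmoid(z):
    """Maps any real z into (0, 1); split by sign so math.exp never overflows."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def relu(z):
    """Returns z for positive inputs and 0 otherwise."""
    return max(0.0, z)

def tanh(z):
    """Zero-centered S-curve with outputs in (-1, 1)."""
    return math.tanh(z)

# Derivatives used during backpropagation.
def sigmoid_grad(z):
    s = sigmoid(z)
    return s * (1.0 - s)              # largest value is 0.25, at z = 0

def relu_grad(z):
    return 1.0 if z > 0 else 0.0      # undefined at exactly 0; 0 is a common convention

def tanh_grad(z):
    return 1.0 - math.tanh(z) ** 2    # largest value is 1, at z = 0

for z in (-5.0, -1.0, 0.0, 1.0, 5.0):
    print(f"z={z:+.1f}  sigmoid={sigmoid(z):.3f} (grad {sigmoid_grad(z):.3f})  "
          f"relu={relu(z):.1f} (grad {relu_grad(z):.0f})  "
          f"tanh={tanh(z):+.3f} (grad {tanh_grad(z):.3f})")
```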

Training a Neural Network

The magic of neural networks lies in their ability to learn from data. Training a neural network involves the following steps:

Forward Propagation: During this phase, input data is fed into the network, and computations are performed layer by layer until the output is obtained.

Loss Calculation: The output is compared to the ground truth (the expected output), and a loss function measures the error between them. Common choices are mean squared error for regression and cross-entropy for classification.

Backpropagation: This is where the network learns from its mistakes. Using the chain rule, the gradients of the loss with respect to the model’s parameters (weights and biases) are computed layer by layer, working backward from the output. Each gradient indicates how a small change in that parameter would change the loss.

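Optimization: An optimization algorithm, such as gradient descent or one of its variants (for example stochastic gradient descent or Adam), uses these gradients to adjust the parameters iteratively, moving each one a small step in the direction that reduces the loss. The size of that step is controlled by the learning rate.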

Repeat: Forward propagation, loss calculation, backpropagation, and optimization are repeated for a predefined number of iterations or until the model converges to a satisfactory performance level.
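The whole training loop can be illustrated with the simplest possible network: a single sigmoid neuron learning the logical OR function. This is a sketch under stated assumptions. The toy dataset, the binary cross-entropy loss, the learning rate of 0.5, and the 1,000 epochs are illustrative choices, and plain full-batch gradient descent stands in for the more sophisticated optimizers used in practice. The comments mark where each of the steps listed above happens.

```python
import math

def sigmoid(z):
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

# Toy dataset (an assumption for illustration): logical OR of two inputs.
data = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]

weights = [0.0, 0.0]
bias = 0.0
learning_rate = 0.5
epochs = 1000

def predict(x):
    # Forward propagation: weighted sum plus bias, then sigmoid.
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return sigmoid(z)

def bce(y_hat, y, eps=1e-12):
    # Loss calculation: binary cross-entropy, clipped to avoid log(0).
    y_hat = min(max(y_hat, eps), 1.0 - eps)
    return -(y * math.log(y_hat) + (1.0 - y) * math.log(1.0 - y_hat))

losses = []
for epoch in range(epochs):                 # Repeat
    grad_w = [0.0] * len(weights)
    grad_b = 0.0
    total_loss = 0.0
    for x, y in data:
        y_hat = predict(x)
        total_loss += bce(y_hat, y)
        # Backpropagation: for sigmoid + cross-entropy, dLoss/dz = y_hat - y.
        dz = y_hat - y
        for i, xi in enumerate(x):
            grad_w[i] += dz * xi            # chain rule: dz/dw_i = x_i
        grad_b += dz                        # dz/db = 1
    n = len(data)
    # Optimization: one gradient descent step using the batch-averaged gradient.
    for i in range(len(weights)):
        weights[i] -= learning_rate * grad_w[i] / n
    bias -= learning_rate * grad_b / n
    losses.append(total_loss / n)

print(f"average loss: {losses[0]:.3f} -> {losses[-1]:.3f}")
print([round(predict(x), 3) for x, _ in data])
```

Because every prediction starts at 0.5, the first epoch’s average loss is ln 2, about 0.693. With a single neuron, backpropagation reduces to one application of the chain rule; in a deeper network the same rule is applied layer by layer, passing gradients backward from the output toward the input.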

Deep Learning Applications

The power of deep learning, fueled by neural networks, has led to groundbreaking advancements in various fields. Here are a few notable applications:

Image Recognition

Convolutional Neural Networks (CNNs), a specialized type of neural network, have revolutionized image recognition tasks. They are used in self-driving cars to identify objects on the road, in medical imaging for disease diagnosis, and in facial recognition systems.

Natural Language Processing

Recurrent Neural Networks (RNNs) and Transformer models have transformed the field of natural language processing. They enable machines to understand and generate human-like text, leading to chatbots, language translation, and sentiment analysis tools.

Autonomous Systems

Neural networks are a core component of many autonomous systems, enabling robots and drones to navigate complex environments, make decisions, and even perform tasks like package delivery.

Healthcare

In healthcare, neural networks are used for disease prediction, drug discovery, and personalized treatment recommendations. They analyze vast amounts of medical data to improve patient outcomes.

Gaming

Neural networks power sophisticated game AI, making opponents in video games more challenging and adaptable to player actions.

Conclusion

Neural networks are the backbone of modern deep learning and artificial intelligence. Understanding their fundamental structure, the role of neurons and activation functions, and the training process is crucial for anyone venturing into this exciting field. As we continue to push the boundaries of what neural networks can achieve, the possibilities for innovation and discovery are boundless. Whether you’re a beginner or an experienced practitioner, mastering the building blocks of neural networks is a fundamental step toward unlocking their true potential.

In future posts, we’ll explore advanced topics in deep learning and delve into practical implementation. Stay tuned for more insights into the fascinating world of artificial intelligence and neural networks!